File Fixity & Data Integrity

Summary/Best Practices

An organization must be able to attest to the fixity and integrity of the materials it preserves. Verifying a file’s fixity is typically accomplished by generating, recording, and monitoring a checksum. Generating a checksum maps a file’s data to a bit string of a fixed size, usually by means of a cryptographic hash function. The resulting hash is stored, then recomputed and compared whenever files are moved, and it can also be used to verify the integrity of files at rest in a storage environment. Checksums are important because they establish a chain of trust between the object, the file system, and the user.

Step by Step

Level 0 to Level 1

- Check file fixity on ingest if it has been provided with the content

- Create fixity info if it wasn’t provided with the content

If fixity information does not exist for a file, it is important to create it so that trust in the file can be established from this point forward. Creating fixity information for a file can be accomplished in a number of ways, using tools ranging from simple graphical software to comprehensive command-line programs.
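As a minimal sketch of creating fixity information, the following Python function computes a checksum with the standard `hashlib` module. The function name `file_checksum`, the SHA-256 default, and the chunk size are assumptions made for illustration; MD5 or another algorithm supported by `hashlib` could be passed instead.

```python
import hashlib


def file_checksum(path, algorithm="sha256", chunk_size=1024 * 1024):
    """Return the hex digest of the file at `path`.

    The file is read in chunks so that large files do not have to fit
    in memory. `algorithm` is any name accepted by hashlib.new().
    """
    digest = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        # iter() with a sentinel keeps reading until read() returns b"".
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

The returned string is the value you would record alongside the file, for example in a manifest or a collection management system.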

Verify fixity whenever content is ingested into your repository or moved into your system. This is only possible if fixity information was provided with the content. Verification means comparing the existing hash value with a second hash value generated after the file is moved or after a period of time has passed. Hash values that are exactly the same indicate that no changes, intentional or accidental, have been made to the file.
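The comparison described above can be sketched in Python as follows. This is an illustration under stated assumptions, not a replacement for a dedicated tool: it assumes the supplied fixity information is a plain-text manifest with one `checksum  relative/file/name` pair per line (the layout produced by utilities such as `sha256sum`), that the algorithm is SHA-256, and that the names `sha256_of` and `verify_manifest` are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of(path):
    """Return the SHA-256 hex digest of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(1024 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_manifest(manifest_path, base_dir):
    """Compare each manifest entry with a freshly computed checksum.

    Returns a dict mapping file name to "ok", "mismatch", or "missing".
    """
    results = {}
    text = Path(manifest_path).read_text(encoding="utf-8")
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        expected, name = line.split(maxsplit=1)
        name = name.strip()
        target = Path(base_dir) / name
        if not target.is_file():
            results[name] = "missing"
        elif sha256_of(target) == expected.lower():
            results[name] = "ok"
        else:
            results[name] = "mismatch"
    return results
```

Any entry reported as "mismatch" or "missing" would be a reason to stop the ingest and request the content again from its source.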

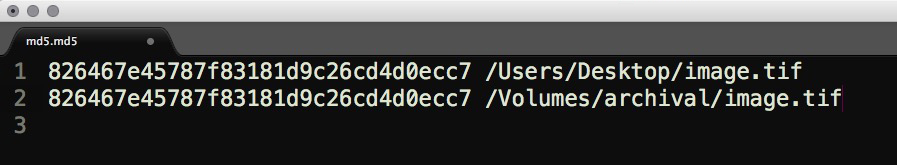

Verifying MD5 checksums

Fixity tools:

Command-line:

- Library of Congress BagIt (Java)

- Library of Congress BagIt (Python)

Cross-platform GUI:

Windows GUI:

Mac GUI:

Level 1 to Level 2

- Check fixity on all ingests

- Use write-blockers when working with original media

- Virus-check high risk content

Moving from level 1 to level 2 in the framework means that, in addition to actively creating and verifying fixity for all ingested content, you are selectively using write protection and virus checks as you ingest born-digital files into your system(s).

Employing write protection means using a write-blocker, a hard disk controller made for the purpose of gaining read-only access to computer hard drives and other media without damaging the contents or inadvertently altering the metadata. Without a write-blocker, changes can be made at the time of connection even if you do not issue an explicit command or action within the operating system. Popular manufacturers include Tableau and WiebeTech.

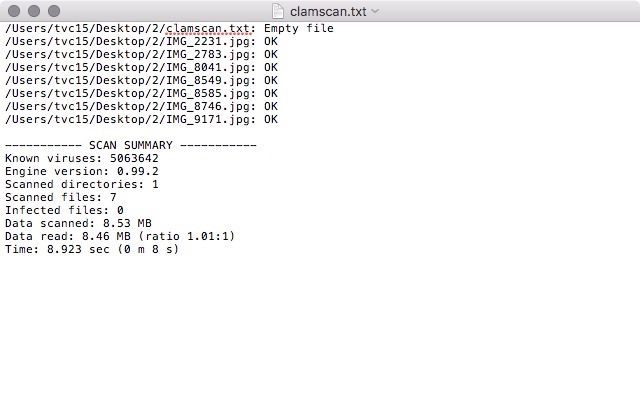

It is always important to scan all born-digital content for viruses no matter where the files originate. This is typically done just after the transfer process so that the source files are not altered. ClamAV is a popular open source antivirus program used in libraries and archival organizations. The software is part of the "stack" in the Hydra Project, is an integral part of BitCurator, and is a core microservice within the popular digital preservation system Archivematica.

Virus scan log file

Level 2 to Level 3

- Check fixity of content at fixed intervals

- Maintain logs of fixity info; supply audit on demand

- Ability to detect corrupt data

- Virus-check all content

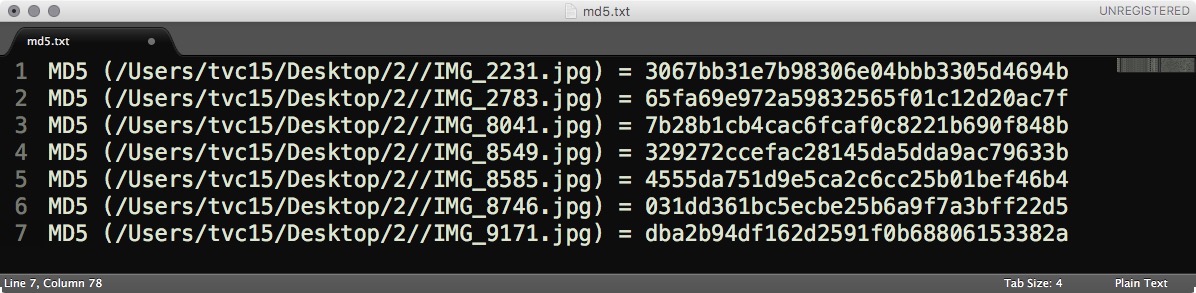

Moving from level 2 to level 3 in the framework requires that you continue performing fixity checks upon ingest but also check content periodically. There are a variety of systems and approaches to regularly checking object fixity. Fixity could be checked monthly, quarterly, or yearly. The more often you check, the more likely you are to detect and repair errors. Most fixity checking software creates file-level or folder-level log files that can be used for directory or project audits. These are simply plain text documents with a list of files and their checksums.
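A folder-level log of the kind described above could be produced with a short script run on a schedule. The sketch below is one possible shape, assuming SHA-256 and a `checksum  relative/path` line layout; the function names `sha256_of` and `write_fixity_log` are hypothetical, and the log file is assumed to live outside the folder being checked.

```python
import hashlib
from pathlib import Path


def sha256_of(path):
    """Return the SHA-256 hex digest of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(1024 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_fixity_log(root, log_path):
    """Write one 'checksum  relative/path' line per file under `root`.

    Files are listed in sorted order so that logs from different runs
    line up when compared. Returns the number of files logged.
    """
    root = Path(root)
    files = sorted(p for p in root.rglob("*") if p.is_file())
    lines = [
        f"{sha256_of(path)}  {path.relative_to(root).as_posix()}"
        for path in files
    ]
    Path(log_path).write_text("".join(line + "\n" for line in lines),
                              encoding="utf-8")
    return len(lines)
```

Naming each log by date, for example `fixity-2024-01-31.txt`, is one simple way to keep a history that can be supplied for an audit.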

Once you have generated baseline fixity information for files or objects, comparing that information with future fixity check information will tell you if a file has changed or been corrupted. This fixity information can be used to support the repair of data that has been damaged.

Virus-checking should be a required component of all digital object accessioning at this level going forward. An appropriate quarantine policy should also be developed to deal with infected or suspect files.

Folder-level MD5 checksum log file

Level 3 to Level 4

- Check fixity of all content in response to specific events or activities

- Ability to replace/repair corrupted data

- Ensure no one person has write access to all copies

In addition to checking fixity during ingests/transfers and on a regular schedule, it is also recommended to check fixity during the production or digitization processes. Files may move through a number of workstations depending on the level of quality control and additional processes in place. File movement fixity checks ensure that files are transferred intact and unchanged. It is also important to check the fixity of content in response to specific events such as hardware failure or security breaches.
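A file movement fixity check can be sketched as a copy followed by a comparison of checksums taken before and after. The helper names `sha256_of` and `copy_with_fixity` are hypothetical, and SHA-256 is an assumed choice. Note also that reading a file back immediately after writing may be served from the operating system cache rather than the storage device, so this is an illustration of the idea rather than a complete safeguard.

```python
import hashlib
import shutil


def sha256_of(path):
    """Return the SHA-256 hex digest of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(1024 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()


def copy_with_fixity(source, destination):
    """Copy `source` to `destination` and confirm the checksums match.

    Returns the checksum on success; raises OSError on a mismatch so
    that the calling workflow cannot silently continue.
    """
    before = sha256_of(source)
    shutil.copy2(source, destination)  # copy2 also preserves timestamps
    after = sha256_of(destination)
    if before != after:
        raise OSError(
            f"fixity mismatch after copying {source} to {destination}"
        )
    return after
```

The same pattern applies at each workstation hand-off: record the checksum before the move and confirm it after.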

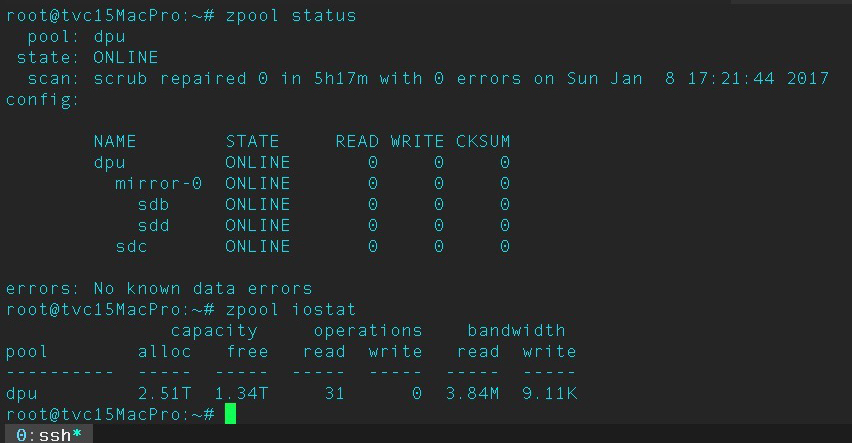

Some storage systems, such as the ZFS file system and the Ceph storage platform, have built-in fixity checks so that data is checked on a regular basis. If damage does occur, these systems use storage redundancy and self-healing features to determine which copy of the data is correct and then overwrite the damaged one. If system-level automatic checking is not an option, you can compare fixity logs using differencing tools such as Meld, DiffMerge, or Kaleidoscope, which compare files and highlight the differences in checksum values. You can then work to repair or replace the corrupted file.
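Where a graphical differencing tool is not convenient, the log comparison can also be scripted. The sketch below assumes each log is plain text with one `checksum  file name` pair per line; the function names `parse_fixity_log` and `compare_fixity_logs` are hypothetical.

```python
def parse_fixity_log(text):
    """Parse 'checksum  name' lines into a {name: checksum} dict."""
    entries = {}
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        checksum, name = line.split(maxsplit=1)
        entries[name.strip()] = checksum.lower()
    return entries


def compare_fixity_logs(baseline_text, current_text):
    """Report files whose checksum changed, disappeared, or appeared."""
    baseline = parse_fixity_log(baseline_text)
    current = parse_fixity_log(current_text)
    return {
        "changed": sorted(
            name for name in baseline
            if name in current and baseline[name] != current[name]
        ),
        "missing": sorted(name for name in baseline if name not in current),
        "added": sorted(name for name in current if name not in baseline),
    }


if __name__ == "__main__":
    # Illustrative checksum values only; real logs hold full digests.
    old = "aaa1  report.pdf\nbbb2  scan.tif\n"
    new = "aaa1  report.pdf\nccc3  scan.tif\n"
    print(compare_fixity_logs(old, new))
```

Files listed under "changed" or "missing" are the candidates for repair or replacement from another copy.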

Formally establishing administrative roles, responsibilities, and authorizations for your systems is one way to ensure that no one person has write access to all copies of a file. This safeguards against accidental deletion. The three major operating system families, Windows, macOS, and Linux (including distributions such as Ubuntu), all have sophisticated file and folder permission settings.

ZFS system stats via macOS terminal